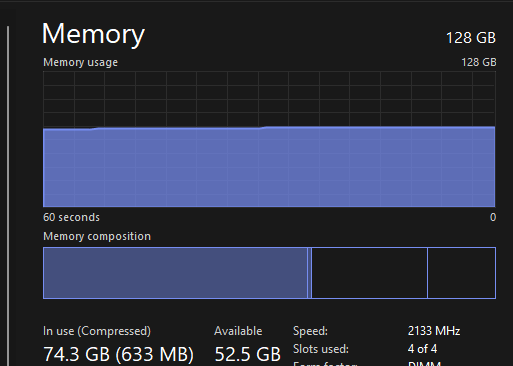

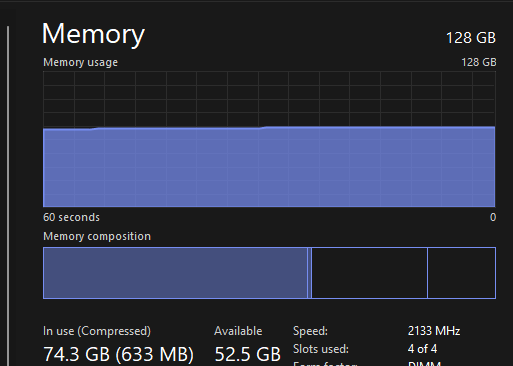

So I interested to trying to deploy latest release of VMware Cloud Foundation (VCF) 5.0 on my Windows 11 Home PC witch have 128GB and 16 core intel cpu.

William Lee wrote a nice artikel about VMware Cloud Foundation 5.0 running on Intel NUC

Disclaimer: This is not officially supported by VMware, please use at your own risk.

Requirements:

Setup

Virtual Machines

- DC02 (Domain Controller, DNS Server) (4GB 2vcpu)

- VCF-M01-ESX01 (ESXi 8.0 Update 1a) (64GBGB 1x140GB 2x600NVME 2x NIC) (Every Thin Provisiond)

- VCF-M01-CB01 (4GB and 4CPU) Only needed through First Deploment

Network settings on my PC

- 1 IP In my home network

- 172.16.12.1 (To Fool Cloudbuilder)

- 172.16.13.1 (To Fool Cloudbuilder)

Procedure:

Install en Configure ESXi

Step 1 – Boot up the ESXi installer from de iso mount and then perform a standard ESXi installation.

Step 2 – Once ESXi is up and running, you will need to minimally configure networking along with an FQDN (ensure proper DNS resolution), NTP and specify which SSD should be used for the vSAN capacity drive. You can use the DCUI to setup the initial networking but recommend switching to ESXi Shell afterwards and finish the require preparations steps as demonstrated in the following ESXCLI commands:

esxcli system ntp set -e true -s pool.ntp.org

esxcli system hostname set –fqdn vcf-m01-esx01.wardvissers.nl

Note: Use vdq -q command to query for the available disks for use with vSAN and ensure there are no partitions residing on the 600GB disks.

Don’t change time server pool.ntp.org.

To ensure that the self-signed TLS certificate that ESXi generates matches that of the FQDN that you had configured, we will need to regenerate the certificate and restart hostd for the changes to go into effect by running the following commands within ESXi Shell:

/bin/generate-certificates

/etc/init.d/hostd restart

Cloudbuilder Config

Step 3 – Deploy the VMware Cloud builder in a separate environment and wait for it to be accessible over the browser. Once CB is online, download the setup_vmware_cloud_builder_for_one_node_management_domain.sh setup script and transfer that to the CB system using the admin user account (root is disabled by default).

Step 4 – Switch to the root user and set the script to have the executable permission and run the script as shown below

su –

chmod +x setup_vmware_cloud_builder_for_one_node_management_domain.sh

./setup_vmware_cloud_builder_for_one_node_management_domain.sh

The script will take some time, especially as it converts the NSX OVA->OVF->OVA and if everything was configured successfully, you should see the same output as the screenshot above.

Step 4 – Download the example JSON deployment file vcf50-management-domain-example.json and and adjust the values based on your environment. In addition to changing the hostname/IP Addresses you will also need to replace all the FILL_ME_IN_VCF_*_LICENSE_KEY with valid VCF 5.0 license keys.

Step 5 – The VMnic in the Cloud Builder VM will acked als a 10GB NIC so I started the deployment not through powershell but normal way in Cloud Builder GUI.

Your deployment time will vary based on your physical resources but it should eventually complete with everything show success as shown in the screenshot below. (I have one retry for finish)

Here are some screenshots VCF 5.0 deployment running on my home PC.

Problems

Check this if you have problems logging in NSX:

https://www.wardvissers.nl/2023/07/26/nsx-endless-spinning-blue-cirle-after-login/

Next Steps.

1. Reploy with use of the Holo-Router https://core.vmware.com/resource/holo-toolkit-20-deploy-router#deploy-holo-router

2. Testing if can deploy Single Host VCF Workload Domain, on same way by following this blog post HERE! 😁

If I can start another 64GB ESXi Server.

Like this:

Like Loading...

You must be logged in to post a comment.