VMware is announcing vSphere 6.7, the latest release of the industry-leading virtualization and cloud platform. vSphere 6.7 is the efficient and secure platform for hybrid clouds, fueling digital transformation by delivering simple and efficient management at scale, comprehensive built-in security, a universal application platform, and seamless hybrid cloud experience.

vSphere 6.7 delivers key capabilities to enable IT organizations address the following notable trends that are putting new demands on their IT infrastructure:

- Explosive growth in quantity and variety of applications, from business critical apps to new intelligent workloads.

- Rapid growth of hybrid cloud environments and use cases.

- On-premises data centers growing and expanding globally, including at the Edge.

- Security of infrastructure and applications attaining paramount importance.

Let’s take a look at some of the key capabilities in vSphere 6.7:

Simple and Efficient Management, at Scale

vSphere 6.7 builds on the technological innovation delivered by vSphere 6.5, and elevates the customer experience to an entirely new level. It provides exceptional management simplicity, operational efficiency, and faster time to market, all at scale.

vSphere 6.7 delivers an exceptional experience for the user with an enhancedvCenter Server Appliance (vCSA). It introduces several new APIs that improve the efficiency and experience to deploy vCenter, to deploy multiple vCenters based on a template, to make management of vCenter Server Appliance significantly easier, as well as for backup and restore. It also significantly simplifies the vCenter Server topology through vCenter with embedded platform services controller in enhanced linked mode, enabling customers to link multiple vCenters and have seamless visibility across the environment without the need for an external platform services controller or load balancers.

vSphere 6.7 delivers an exceptional experience for the user with an enhancedvCenter Server Appliance (vCSA). It introduces several new APIs that improve the efficiency and experience to deploy vCenter, to deploy multiple vCenters based on a template, to make management of vCenter Server Appliance significantly easier, as well as for backup and restore. It also significantly simplifies the vCenter Server topology through vCenter with embedded platform services controller in enhanced linked mode, enabling customers to link multiple vCenters and have seamless visibility across the environment without the need for an external platform services controller or load balancers.

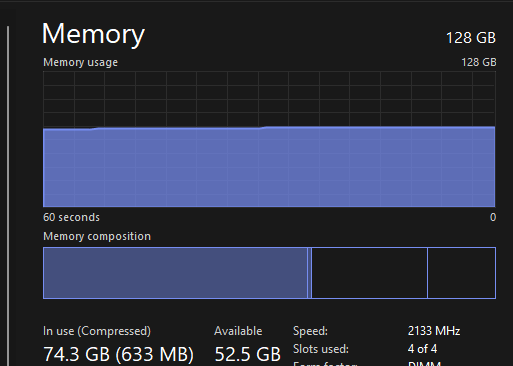

Moreover, with vSphere 6.7 vCSA delivers phenomenal performance improvements (all metrics compared at cluster scale limits, versus vSphere 6.5):

- 2X faster performance in vCenter operations per second

- 3X reduction in memory usage

- 3X faster DRS-related operations (e.g. power-on virtual machine)

These performance improvements ensure a blazing fast experience for vSphere users, and deliver significant value, as well as time and cost savings in a variety of use cases, such as VDI, Scale-out apps, Big Data, HPC, DevOps, distributed cloud native apps, etc.

vSphere 6.7 improves efficiency at scale when updating ESXi hosts, significantly reducing maintenance time by eliminating one of two reboots normally required for major version upgrades (Single Reboot). In addition to that, vSphere Quick Boot is a new innovation that restarts the ESXi hypervisor without rebooting the physical host, skipping time-consuming hardware initialization.

Another key component that allows vSphere 6.7 to deliver a simplified and efficient experience is the graphical user interface itself. The HTML5-based vSphere Client provides a modern user interface experience that is both responsive and easy to use. With vSphere 6.7, it includes added functionality to support not only the typical workflows customers need but also other key functionality like managing NSX, vSAN, VUM as well as third-party components.

Another key component that allows vSphere 6.7 to deliver a simplified and efficient experience is the graphical user interface itself. The HTML5-based vSphere Client provides a modern user interface experience that is both responsive and easy to use. With vSphere 6.7, it includes added functionality to support not only the typical workflows customers need but also other key functionality like managing NSX, vSAN, VUM as well as third-party components.

Comprehensive Built-In Security

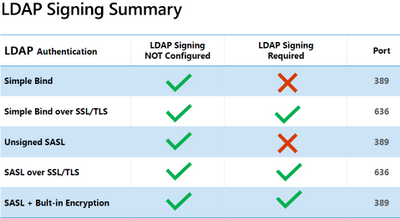

vSphere 6.7 builds on the security capabilities in vSphere 6.5 and leverages its unique position as the hypervisor to offer comprehensive security that starts at the core, via an operationally simple policy-driven model.

vSphere 6.7 adds support for Trusted Platform Module (TPM) 2.0 hardware devices and also introduces Virtual TPM 2.0, significantly enhancing protection and assuring integrity for both the hypervisor and the guest operating system. This capability helps prevent VMs and hosts from being tampered with, prevents the loading of unauthorized components and enables guest operating system security features security teams are asking for.

vSphere 6.7 adds support for Trusted Platform Module (TPM) 2.0 hardware devices and also introduces Virtual TPM 2.0, significantly enhancing protection and assuring integrity for both the hypervisor and the guest operating system. This capability helps prevent VMs and hosts from being tampered with, prevents the loading of unauthorized components and enables guest operating system security features security teams are asking for.

Data encryption was introduced with vSphere 6.5 and very well received. With vSphere 6.7, VM Encryption is further enhanced and more operationally simple to manage. vSphere 6.7 simplifies workflows for VM Encryption, designed to protect data at rest and in motion, making it as easy as a right-click while also increasing the security posture of encrypting the VM and giving the user a greater degree of control to protect against unauthorized data access.

vSphere 6.7 also enhances protection for data in motion by enabling encrypted vMotion across different vCenter instances as well as versions, making it easy to securely conduct data center migrations, move data across a hybrid cloud environment (between on-premises and public cloud), or across geographically distributed data centers.

vSphere 6.7 introduces support for the entire range of Microsoft’s Virtualization Based Security technologies. This is a result of close collaboration between VMware and Microsoft to ensure Windows VMs on vSphere support in-guest security features while continuing to run performant and secure on the vSphere platform.

vSphere 6.7 delivers comprehensive built-in security and is the heart of a secure SDDC. It has deep integration and works seamlessly with other VMware products such as vSAN, NSX and vRealize Suite to provide a complete security model for the data center.

Universal Application Platform

vSphere 6.7 is a universal application platform that supports new workloads (including 3D Graphics, Big Data, HPC, Machine Learning, In-Memory, and Cloud-Native) as well as existing mission critical applications. It also supports and leverages some of the latest hardware innovations in the industry, delivering exceptional performance for a variety of workloads.

vSphere 6.7 further enhances the support and capabilities introduced for GPUs through VMware’s collaboration with Nvidia, by virtualizing Nvidia GPUs even for non-VDI and non-general-purpose-computing use cases such as artificial intelligence, machine learning, big data and more. With enhancements to Nvidia GRID™ vGPU technology in vSphere 6.7, instead of having to power off workloads running on GPUs, customers can simply suspend and resume those VMs, allowing for better lifecycle management of the underlying host and significantly reducing disruption for end-users. VMware continues to invest in this area, with the goal of bringing the full vSphere experience to GPUs in future releases.

vSphere 6.7 continues to showcase VMware’s technological leadership and fruitful collaboration with our key partners by adding support for a key industry innovation poised to have a dramatic impact on the landscape, which is persistent memory. With vSphere Persistent Memory, customers using supported hardware modules, such as those available from Dell-EMC and HPE, can leverage them either as super-fast storage with high IOPS, or expose them to the guest operating system as non-volatile memory. This will significantly enhance performance of the OS as well as applications across a variety of use cases, making existing applications faster and more performant and enabling customers to create new high-performance applications that can leverage vSphere Persistent Memory.

vSphere 6.7 continues to showcase VMware’s technological leadership and fruitful collaboration with our key partners by adding support for a key industry innovation poised to have a dramatic impact on the landscape, which is persistent memory. With vSphere Persistent Memory, customers using supported hardware modules, such as those available from Dell-EMC and HPE, can leverage them either as super-fast storage with high IOPS, or expose them to the guest operating system as non-volatile memory. This will significantly enhance performance of the OS as well as applications across a variety of use cases, making existing applications faster and more performant and enabling customers to create new high-performance applications that can leverage vSphere Persistent Memory.

Seamless Hybrid Cloud Experience

With the fast adoption of vSphere-based public clouds through VMware Cloud Provider Program partners, VMware Cloud on AWS, as well as other public cloud providers, VMware is committed to delivering a seamless hybrid cloud experience for customers.

vSphere 6.7 introduces vCenter Server Hybrid Linked Mode, which makes it easy and simple for customers to have unified visibility and manageability across an on-premises vSphere environment running on one version and a vSphere-based public cloud environment, such as VMware Cloud on AWS, running on a different version of vSphere. This ensures that the fast pace of innovation and introduction of new capabilities in vSphere-based public clouds does not force the customer to constantly update and upgrade their on-premises vSphere environment.

vSphere 6.7 also introduces Cross-Cloud Cold and Hot Migration, further enhancing the ease of management across and enabling a seamless and non-disruptive hybrid cloud experience for customers.

vSphere 6.7 also introduces Cross-Cloud Cold and Hot Migration, further enhancing the ease of management across and enabling a seamless and non-disruptive hybrid cloud experience for customers.

As virtual machines migrate between different data centers or from an on-premises data center to the cloud and back, they likely move across different CPU types. vSphere 6.7 delivers a new capability that is key for the hybrid cloud, called Per-VM EVC. Per-VM EVC enables the EVC (Enhanced vMotion Compatibility) mode to become an attribute of the VM rather than the specific processor generation it happens to be booted on in the cluster. This allows for seamless migration across different CPUs by persisting the EVC mode per-VM during migrations across clusters and during power cycles.

Previously, vSphere 6.0 introduced provisioning between vCenter instances. This is often called “cross-vCenter provisioning.” The use of two vCenter instances introduces the possibility that the instances are on different release versions. vSphere 6.7 enables customers to use different vCenter versions while allowing cross-vCenter, mixed-version provisioning operations (vMotion, Full Clone and cold migrate) to continue seamlessly. This is especially useful for customers leveraging VMware Cloud on AWS as part of their hybrid cloud.

Learn More

As the ideal, efficient, secure universal platform for hybrid cloud, supporting new and existing applications, serving the needs of IT and the business, vSphere 6.7 reinforces your investment in VMware. vSphere 6.7 is one of the core components of VMware’s SDDC and a fundamental building block of your cloud strategy. With vSphere 6.7, you can now run, manage, connect, and secure your applications in a common operating environment, across your hybrid cloud.

This article only touched upon the key highlights of this release, but there are many more new features. To learn more about vSphere 6.7, please see the following resources.

Like this:

Like Loading...

Figure 1: Holodeck Nested Diagram

Figure 1: Holodeck Nested Diagram

vSphere 6.7 delivers an exceptional experience for the user with an enhancedvCenter Server Appliance (vCSA). It introduces several new APIs that improve the efficiency and experience to deploy vCenter, to deploy multiple vCenters based on a template, to make management of vCenter Server Appliance significantly easier, as well as for backup and restore. It also significantly simplifies the vCenter Server topology through vCenter with embedded platform services controller in enhanced linked mode, enabling customers to link multiple vCenters and have seamless visibility across the environment without the need for an external platform services controller or load balancers.

vSphere 6.7 delivers an exceptional experience for the user with an enhancedvCenter Server Appliance (vCSA). It introduces several new APIs that improve the efficiency and experience to deploy vCenter, to deploy multiple vCenters based on a template, to make management of vCenter Server Appliance significantly easier, as well as for backup and restore. It also significantly simplifies the vCenter Server topology through vCenter with embedded platform services controller in enhanced linked mode, enabling customers to link multiple vCenters and have seamless visibility across the environment without the need for an external platform services controller or load balancers. Another key component that allows vSphere 6.7 to deliver a simplified and efficient experience is the graphical user interface itself. The HTML5-based vSphere Client provides a modern user interface experience that is both responsive and easy to use. With vSphere 6.7, it includes added functionality to support not only the typical workflows customers need but also other key functionality like managing NSX, vSAN, VUM as well as third-party components.

Another key component that allows vSphere 6.7 to deliver a simplified and efficient experience is the graphical user interface itself. The HTML5-based vSphere Client provides a modern user interface experience that is both responsive and easy to use. With vSphere 6.7, it includes added functionality to support not only the typical workflows customers need but also other key functionality like managing NSX, vSAN, VUM as well as third-party components. vSphere 6.7 adds support for Trusted Platform Module (TPM) 2.0 hardware devices and also introduces Virtual TPM 2.0, significantly enhancing protection and assuring integrity for both the hypervisor and the guest operating system. This capability helps prevent VMs and hosts from being tampered with, prevents the loading of unauthorized components and enables guest operating system security features security teams are asking for.

vSphere 6.7 adds support for Trusted Platform Module (TPM) 2.0 hardware devices and also introduces Virtual TPM 2.0, significantly enhancing protection and assuring integrity for both the hypervisor and the guest operating system. This capability helps prevent VMs and hosts from being tampered with, prevents the loading of unauthorized components and enables guest operating system security features security teams are asking for. vSphere 6.7 continues to showcase VMware’s technological leadership and fruitful collaboration with our key partners by adding support for a key industry innovation poised to have a dramatic impact on the landscape, which is persistent memory. With vSphere Persistent Memory, customers using supported hardware modules, such as those available from Dell-EMC and HPE, can leverage them either as super-fast storage with high IOPS, or expose them to the guest operating system as non-volatile memory. This will significantly enhance performance of the OS as well as applications across a variety of use cases, making existing applications faster and more performant and enabling customers to create new high-performance applications that can leverage vSphere Persistent Memory.

vSphere 6.7 continues to showcase VMware’s technological leadership and fruitful collaboration with our key partners by adding support for a key industry innovation poised to have a dramatic impact on the landscape, which is persistent memory. With vSphere Persistent Memory, customers using supported hardware modules, such as those available from Dell-EMC and HPE, can leverage them either as super-fast storage with high IOPS, or expose them to the guest operating system as non-volatile memory. This will significantly enhance performance of the OS as well as applications across a variety of use cases, making existing applications faster and more performant and enabling customers to create new high-performance applications that can leverage vSphere Persistent Memory. vSphere 6.7 also introduces Cross-Cloud Cold and Hot Migration, further enhancing the ease of management across and enabling a seamless and non-disruptive hybrid cloud experience for customers.

vSphere 6.7 also introduces Cross-Cloud Cold and Hot Migration, further enhancing the ease of management across and enabling a seamless and non-disruptive hybrid cloud experience for customers.

You must be logged in to post a comment.